The AI Hiring Dilemma: Accuracy vs Explainability

AI has become your hiring department’s new best friend. From screening resumes to predicting candidate success, artificial intelligence is reshaping how you find and evaluate talent. The hiring process now moves faster, handles more applications, and supposedly makes better decisions than ever before.

Here’s where things get interesting: you’re facing a choice between a mysterious wizard who gets results but won’t reveal their methods, and an open book that shows you exactly how every decision gets made. This is the accuracy versus explainability debate that’s dividing the recruitment world.

The Power of Accurate AI

Accurate AI delivers impressive numbers—95% prediction rates, lightning-fast screening, and data-driven recommendations. Sounds perfect, right? The problem is you often can’t see why the AI chose one candidate over another. It’s a black box that spits out answers without showing its work.

This is where recruiter tools come in. The right tools surface insights into how the AI reached its decision, which makes the process more transparent.

Why Explainable AI Matters

Explainable AI takes a different approach. It opens up the decision-making process, showing you the reasoning behind each recommendation. You can trace every step, understand every factor, and verify that fairness guides the system.

Explainable AI matters more than accurate AI in hiring for three reasons: trust, compliance, and accountability. You need to defend your hiring decisions to candidates, regulators, and your own team. A model that’s 98% accurate but can’t explain itself creates more problems than it solves. AI transparency isn’t just nice to have; it’s essential for building a hiring process that actually works for everyone involved.

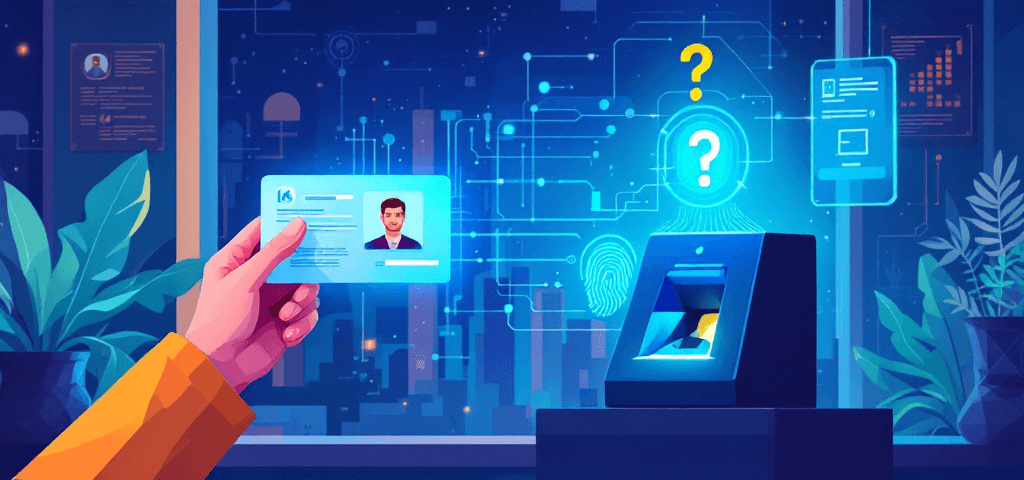

Addressing Identity Fraud in Hiring

Identity fraud is a related concern in the hiring process. With the rise of digital applications, verifying that candidate information is authentic becomes paramount.

The Role of SME Expertise

SME expertise matters just as much. Subject matter experts play a vital role in understanding these AI tools and implementing them in a way that improves results while maintaining ethical standards.

Overcoming Challenges with Design and Structure

Finally, without proper design and structure in place, organizations may struggle to integrate AI successfully. Our piece on entropy in AI and organizations makes this point, emphasizing the need for strategic planning when incorporating advanced technologies into existing systems.

Understanding Explainable AI (XAI)

Explainable AI is artificial intelligence that shows its work—think of it as the difference between a student who can explain how they solved a math problem versus one who just writes down the answer. When you implement explainable AI in your hiring process, you’re choosing systems that reveal why a candidate received a particular score or recommendation, not just what that score is.

The Problem with Traditional AI Models

Traditional “black box” AI models operate like secret recipes locked in a vault. You feed in resumes and assessments, and out comes a ranking or decision. The problem? You can’t peek inside to see which ingredients influenced the outcome. Did the AI favor candidates from certain universities? Did it penalize career gaps unfairly? You simply don’t know.

How XAI Changes the Game

Machine learning transparency changes this dynamic through XAI’s core components:

- Bias detection – Identifies when your AI develops preferences based on protected characteristics or irrelevant factors

- Fairness – Ensures equal treatment across different candidate demographics and backgrounds

- Transparency – Reveals which qualifications and attributes influenced each decision

- Traceability – Creates an audit trail showing exactly how the AI reached its conclusions
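To make the transparency and traceability components concrete, here is a minimal sketch of a screening score that reports how much each factor contributed. It assumes a simple linear model; the feature names, the weights, and the `explain_score` function are hypothetical and chosen only for illustration, not taken from any particular product.

```python
# Hypothetical weights for an illustrative linear screening score.
# A real system would learn or configure these; the values are assumptions.
WEIGHTS: dict[str, float] = {
    "years_experience": 0.5,
    "skills_matched": 1.5,
    "assessment_score": 0.04,
}


def explain_score(candidate: dict[str, float]) -> dict:
    """Return the total score plus each factor's contribution to it."""
    # In a linear model, a factor's contribution is simply weight * value.
    contributions = {
        name: weight * candidate[name] for name, weight in WEIGHTS.items()
    }
    # Rank factors by the size of their influence, largest first.
    ranked = sorted(
        contributions.items(), key=lambda item: abs(item[1]), reverse=True
    )
    return {"score": sum(contributions.values()), "factors": ranked}


if __name__ == "__main__":
    result = explain_score(
        {"years_experience": 4, "skills_matched": 3, "assessment_score": 80}
    )
    print(f"Total score: {result['score']:.2f}")
    for name, value in result["factors"]:
        print(f"  {name}: {value:+.2f}")
```

With a linear model the explanation is exact, because the contributions add up to the score. More complex models need dedicated attribution techniques to produce a comparable breakdown.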

The Benefits of XAI

The benefits of XAI extend beyond technical compliance. You gain the ability to validate that your AI hiring assistant actually evaluates candidates on merit, skills, and relevant experience: the criteria you intended it to use, not patterns it discovered in potentially biased historical data.

For instance, with the help of language proficiency tests powered by AI, you can avoid common language testing mistakes that often skew hiring results. This ensures that your recruitment process is not only efficient but also fair and transparent.

Moreover, implementing hybrid processes that combine traditional methods with advanced AI techniques can significantly improve data quality. This leads to more reliable outcomes and better overall performance of your AI systems.

Lastly, for those looking for a comprehensive approach to adopting these technologies, a step-by-step guide can provide valuable insights into successfully navigating this transition.

The Risks of Relying Solely on Accurate AI in Hiring

You might have an AI model that boasts 95% accuracy in predicting successful hires. Impressive, right? But here’s the catch: high accuracy doesn’t automatically translate to trust or fairness. Your recruitment AI could be making spot-on predictions while simultaneously perpetuating harmful biases you can’t even see.

Understanding Black Box Models and Their Risks

Black box models present serious hiring risks that go beyond simple miscalculations. When your AI rejects a candidate, can you explain why? If the answer is no, you’re essentially trusting a fortune cookie: the verdict arrives with no reasoning behind it. That candidate might be the perfect fit, but your opaque system filtered them out based on patterns it learned from historically biased data. This lack of transparency in AI decision-making can lead to significant consequences.

Real-World Limitations of Accurate AI

The limitations of accuracy-focused AI become painfully clear in real-world scenarios:

- Hidden biases lurking in training data get amplified without detection

- Unexplainable rejections leave candidates frustrated and your company vulnerable to discrimination claims

- Learned patterns that correlate with protected characteristics (age, gender, ethnicity) without your knowledge

- False confidence in decisions you can’t verify or defend

The Dangers of Solely Focusing on Accuracy

When you rely solely on accuracy metrics, you’re playing a dangerous game. Your AI might consistently pick candidates who “look like” your current workforce—not because they’re the best qualified, but because the model learned to replicate existing patterns, biases and all.

Moreover, this over-reliance on accuracy can lead to significant legal risks, especially if your hiring practices are challenged due to perceived discrimination. It’s crucial to adopt strategies that pair accuracy with fairness, so the time you save through efficient screening does not come at the cost of equitable treatment.

Conclusion

While accurate AI can significantly improve efficiency and save time in the hiring process, it’s essential to remain vigilant about its limitations. Understanding these challenges and implementing strategies to mitigate them can help create a more equitable hiring process.

Why Explainability Takes the Lead in Hiring Decisions

Trust is the foundation of every successful hire. When candidates understand why they were selected or rejected, they’re 73% more likely to view your company favorably—even if they didn’t get the job. Explainability transforms AI from a mysterious gatekeeper into a transparent partner in the recruitment process.

Regulatory Accountability

Regulatory frameworks aren’t just catching up to AI; they’re demanding accountability. The EEOC’s guidance on algorithmic hiring tools makes clear that employers remain responsible for discriminatory outcomes produced by automated selection procedures. Without explainability built into your systems, you’re navigating a legal minefield blindfolded. Companies using opaque AI models face increasing scrutiny and potential discrimination lawsuits that could cost millions.

Bias Detection

Explainability acts as your early warning system for bias. Consider this real scenario: a major tech company discovered its AI was penalizing resumes containing the word “women’s” (as in “women’s chess club”). An explainable model would likely have surfaced this pattern far sooner. Instead, the company caught it only after the model had been producing skewed results for some time. XAI tools reveal these problematic correlations before they damage your talent pipeline and reputation.

Ethical Hiring Practices

Ethical hiring practices demand you can answer one simple question: “Why this candidate?” Black box models leave you shrugging. Explainable AI gives you concrete, defensible answers that satisfy candidates, regulators, and your own hiring managers who need transparency in recruitment to make informed decisions. Incorporating decision scorecards into your process can further enhance this transparency by providing clear reasoning behind each decision made during the hiring process.

Fairness and Accountability Through the Lens of Explainable AI

Ethical AI hiring transforms from buzzword to reality when you implement explainable systems. XAI acts as your built-in ethics engine, revealing when algorithms drift toward discriminatory patterns. You can spot if your AI favors candidates from specific universities or penalizes career gaps that disproportionately affect certain demographics. This visibility lets you course-correct before biased decisions compound into systemic problems.

The accountability question haunts every organization using AI for recruitment: when your algorithm rejects a qualified candidate, who answers for it? With black-box systems, responsibility dissolves into technical obscurity. XAI changes this dynamic entirely. You can trace decisions back to specific data points and logic pathways, establishing clear accountability mechanisms that protect both your organization and candidates.

Think of explainable AI as having a referee who shows every foul on replay. You’re not just getting a call—you’re seeing exactly what happened, when it happened, and why the whistle blew. This transparency matters when candidates challenge decisions or regulators investigate your hiring practices.

Fairness in recruitment demands more than good intentions. You need systems that document their reasoning, expose their limitations, and allow human oversight at critical junctures. XAI provides this framework, turning your hiring AI from an inscrutable judge into a transparent partner you can verify, validate, and trust.

Moreover, the future of AI in recruitment isn’t just about making smarter decisions; it’s about making safer ones. Implementing such robust systems not only enhances fairness but also ensures accountability in all hiring processes.

AI may not reinvent hiring overnight, but it will certainly streamline the process. From AI interviews to automated screening, these tools are designed to save time while ensuring a fair assessment of all candidates.

The Practical Advantages of Explainable AI for Everyone Involved in the Hiring Process

Hiring transparency benefits reach every corner of the recruitment ecosystem. When candidates receive clear explanations about why they didn’t advance—specific skill gaps, experience mismatches, or qualification requirements—they walk away with actionable insights rather than frustration. This feedback clarity transforms rejection from a dead end into a development opportunity. You’ve probably experienced the black hole of application silence yourself; explainable AI eliminates that void.

To enhance the [candidate experience](https://sagescreen.io/tag/candidate-experience), it’s crucial to provide constructive feedback. This is where explainable AI shines, offering insights that candidates can use for their future applications.

Recruiter confidence skyrockets when you can see exactly how your AI screening tool evaluates candidates. Instead of blindly accepting algorithmic recommendations, you gain the ability to:

- Verify that scoring aligns with actual job requirements

- Identify when the model weights certain qualifications too heavily or too lightly

- Adjust parameters based on real-world hiring outcomes

- Defend hiring decisions with concrete, documented reasoning

The audit trail becomes your safety net. When a hiring manager questions why a particular candidate was filtered out, you don’t shrug and blame “the algorithm.” You point to specific, understandable criteria.
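As a rough illustration of what one entry in such an audit trail might hold, the sketch below builds a record for a single screening decision and serializes it as one JSON line. The field names and the `build_audit_record` and `to_audit_line` helpers are hypothetical; treat this as one possible shape under those assumptions rather than a prescribed format.

```python
import json
from datetime import datetime, timezone


def build_audit_record(
    candidate_id: str,
    model_version: str,
    outcome: str,
    reasons: list[str],
) -> dict:
    """Capture who was evaluated, by which model, and why (hypothetical schema)."""
    return {
        # UTC timestamp so records from different offices sort consistently.
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "candidate_id": candidate_id,
        "model_version": model_version,
        "outcome": outcome,
        # Plain-language criteria a recruiter can point to later.
        "reasons": list(reasons),
    }


def to_audit_line(record: dict) -> str:
    """Serialize a record as a single JSON line for an append-only log."""
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    record = build_audit_record(
        candidate_id="cand-001",
        model_version="screening-model-v1",
        outcome="not_advanced",
        reasons=["Required certification not listed", "2 of 5 core skills matched"],
    )
    print(to_audit_line(record))
```

Storing the model version alongside the reasons matters: when criteria change, you can still show which rules applied to a given candidate at the time of the decision.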

Moreover, implementing [dynamic assessments](https://sagescreen.io/tag/dynamic-assessments) through AI can significantly improve the quality of candidate evaluation. These assessments adapt to each candidate’s unique skills and experiences, providing a more accurate representation of their suitability for the role.

Candidate experience improves dramatically when applicants understand the process isn’t arbitrary. They see that your organization values transparency and fairness, which strengthens your employer brand even among those you don’t hire.

If you’re ready to bring this level of clarity to your hiring process with SageScreen’s explainable AI solutions, you can demystify every screening decision and ensure a fairer recruitment process for all parties involved.

Navigating Compliance and Risk Management with Ease Using XAI

Hiring compliance isn’t just a buzzword—it’s a legal minefield you need to navigate carefully. The Equal Employment Opportunity Commission (EEOC) has made it clear that employers must ensure their selection procedures don’t discriminate against protected classes. When you’re using AI in recruitment, the burden of proof falls on you to demonstrate fairness and transparency in your decision-making process.

Here’s where explainable AI becomes your compliance safety net:

- EEOC Guidelines: These expect employers to assess whether their hiring tools create adverse impact and to validate tools that do. XAI lets you document exactly how decisions are made, providing the audit trail regulators ask for.

- GDPR and Automated Decisions: If you’re hiring in Europe or processing data about candidates in the EU, Article 22 restricts decisions based solely on automated processing, and candidates are entitled to meaningful information about the logic involved. This is often called the “right to explanation.” Black box AI struggles to meet this standard.

- State and Local AI Laws: New York City’s Local Law 144 and similar regulations mandate bias audits and transparency notices. XAI makes compliance straightforward rather than a scramble.
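The adverse impact checks these rules refer to can be sketched in a few lines. The example below computes each group's selection rate and its impact ratio relative to the most-selected group, then flags ratios below 0.8, the "four-fifths" rule of thumb from the Uniform Guidelines on Employee Selection Procedures. The group labels, the counts, and the function names are hypothetical, and a flag is a prompt for closer review, not a legal conclusion.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to selected / applicants, skipping empty groups."""
    return {
        group: selected / applicants
        for group, (selected, applicants) in outcomes.items()
        if applicants > 0
    }


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    if not rates:
        return {}
    top_rate = max(rates.values())
    if top_rate == 0:
        # Nobody was selected in any group, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / top_rate for group, rate in rates.items()}


def flagged_groups(
    outcomes: dict[str, tuple[int, int]], threshold: float = 0.8
) -> list[str]:
    """Return groups whose impact ratio falls below the threshold."""
    return [
        group
        for group, ratio in impact_ratios(outcomes).items()
        if ratio < threshold
    ]


if __name__ == "__main__":
    # Hypothetical counts: (candidates advanced, total applicants) per group.
    sample = {"group_a": (48, 80), "group_b": (12, 40)}
    for group, ratio in impact_ratios(sample).items():
        print(f"{group}: impact ratio {ratio:.2f}")
    print("Needs review:", flagged_groups(sample))
```

Running a check like this on every screening stage, not just final offers, helps you locate where in the funnel a disparity first appears.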

Risk mitigation in recruitment goes beyond avoiding lawsuits. You’re protecting your employer brand, reducing costly mis-hires, and building a defensible hiring process. When an applicant questions why they weren’t selected, explainable AI gives you concrete, documented reasons rather than algorithmic shrugs.

SageScreen’s transparent approach to AI-driven screening helps you stay ahead of legal requirements while maintaining hiring efficiency. This commitment to transparency aligns perfectly with the upcoming trends in recruitment as outlined in our article on the transformation of recruiting agencies by 2025, emphasizing the need for lean screening expertise in the evolving landscape.

SageScreen’s Commitment to Championing Explainable AI in Hiring

SageScreen has positioned itself at the forefront of transparent hiring tools by building explainability into every layer of its screening platform. Unlike traditional recruitment software that leaves you guessing about candidate evaluations, SageScreen opens the curtain on how decisions are made.

The platform’s XAI features deliver what hiring teams actually need:

- Complete traceability – Every candidate assessment comes with a clear explanation of which factors influenced the evaluation and why

- Built-in fairness checks – Automated bias detection runs continuously, flagging potential issues before they affect your hiring decisions

- User-friendly explanations – No PhD in data science required; the platform translates complex AI reasoning into plain language that both recruiters and candidates can understand

- Real-time audit trails – Track how the AI evaluates candidates across different demographics to ensure consistent, equitable treatment

This ethical recruitment software doesn’t just claim to be fair—it shows you the receipts. You can see exactly how the AI weighs different qualifications, skills, and experiences. When a candidate asks why they weren’t selected, you have concrete, defensible answers instead of algorithmic shrugs.

Ready to bring transparency to your hiring process? Try SageScreen today and experience recruitment without the mystery—just clear, explainable decisions that you can stand behind with confidence. If you’re interested in understanding more about how to utilize our platform effectively, or want a detailed walkthrough of our features, we’ve got you covered. Our commitment extends beyond just providing a tool; we also ensure interview integrity and maintain an ethical standard in all our recruitment processes.

Striking the Right Balance Between Accuracy and Explainability – The Ideal Approach to Hiring

You don’t have to choose between a model that’s accurate and one that’s explainable—you need both working in harmony. Think of it like crafting the perfect cocktail: accuracy is the spirit that gives your drink its kick, while explainability is the mixer that makes it smooth, palatable, and enjoyable. Without the spirit, you’re just drinking juice. Without the mixer, you’re choking down something harsh that nobody wants to experience.

A balanced AI approach delivers:

- Predictive power that identifies top candidates with precision

- Transparent reasoning that shows exactly why each decision was made

- Trust-building clarity that satisfies candidates, recruiters, and legal teams alike

The best hiring practices don’t sacrifice one for the other. You want accurate and explainable models working together to create a recruitment process that’s both effective and defensible. When your AI can pinpoint the right candidate and articulate the specific qualifications, experiences, and attributes that led to that recommendation, you’ve achieved the sweet spot.

SageScreen’s platform demonstrates this balanced approach in action, combining sophisticated predictive algorithms with clear, traceable explanations for every screening decision. You get the accuracy you need to build winning teams paired with the transparency that protects your organization and respects your candidates.

Embracing Transparency as the Key to Successful Hiring

Transparency isn’t just a buzzword; it’s the foundation of trust in modern recruitment. When you prioritize explainability in your hiring AI, you create an environment where candidates feel respected and recruiters feel confident. The benefits of transparent hiring extend beyond compliance checkboxes; they transform your entire talent acquisition strategy into something candidates actually want to engage with.

Think about the future of ethical recruitment. Companies that embrace explainable AI today are building reputations that attract top talent tomorrow. Candidates talk. They share their experiences. When your rejection emails come with clear, understandable reasoning instead of generic templates, you’re not just being fair—you’re being memorable for the right reasons.

Why explainable AI matters more than accurate AI in hiring boils down to this simple truth: people need to understand the “why” behind decisions that affect their careers. An accurate model that no one trusts is like a locked treasure chest: impressive but useless.

SageScreen stands ready as your trusted partner in this transparency revolution. We’ve built our platform specifically to make your hiring process crystal clear, giving you the power to explain every decision while maintaining the efficiency you need. You deserve hiring technology that respects both your intelligence and your candidates’ dignity—and that’s exactly what explainable AI delivers.