Most companies mess this up. They attach AI to broken processes, call it innovation, and wonder why nobody trusts the results. The algorithm becomes the scapegoat for decisions that were already inconsistent.

AI in hiring processes includes resume screening, interview scheduling, candidate assessment, and predictive analytics. When done correctly, it eliminates the unpredictability that damages trust: the hiring manager who changes criteria mid-search, the interviewer who asks different questions to every candidate, the gut-feel decision disguised as judgment.

The change is significant. AI recruiting adoption jumped from experimental to standard practice across Fortune 500 companies in less than three years. Candidates increasingly prefer structured AI assessments over inconsistent human interviews. They trust the machine more than they trust your bias.

The question isn’t whether to use AI. It’s whether you’re using it in ways that build or destroy credibility.

The Role of AI in Modern Recruiting

AI recruiting tools now play a role at every stage of the hiring process. They are no longer just helpful add-ons; they have become the foundation of recruitment.

How AI is Transforming Recruitment

Here’s how AI is making a difference in recruitment:

- Optimizing Job Descriptions: AI analyzes job descriptions and suggests changes to remove any gender bias, making them more inclusive and appealing to a wider range of candidates.

- Targeting Passive Candidates: Predictive algorithms identify individuals who are likely to switch jobs, so recruitment marketing campaigns can reach them with personalized messages.

- Streamlining Candidate Screening: With AI, the process of reviewing resumes has become faster and more efficient. Resumes are automatically scanned, ranked, and filtered based on predefined criteria, saving recruiters valuable time.

- Automating Initial Interactions: Chatbots are being used to handle basic inquiries from candidates, schedule interviews, and gather initial information, freeing up recruiters to focus on more complex tasks.

The Benefits of AI in Recruitment

The benefits of using AI in recruitment are clear:

- Time Savings: By automating repetitive tasks such as resume screening and initial candidate interactions, recruiters can spend less time on administrative work and more time on strategic decision-making.

- Consistency: Unlike humans, who may be influenced by biases or personal preferences, AI applies the same set of criteria to every candidate profile consistently. This helps ensure fairer evaluations and reduces the risk of discrimination.

- Data-Driven Insights: AI has the ability to analyze large volumes of data quickly and accurately. It can identify patterns and correlations that may not be immediately obvious to human recruiters, leading to better hiring decisions.

The Responsible Use of AI in Recruitment

While the potential benefits of AI in recruitment are significant, it is important to approach its implementation with caution. Here are some key considerations:

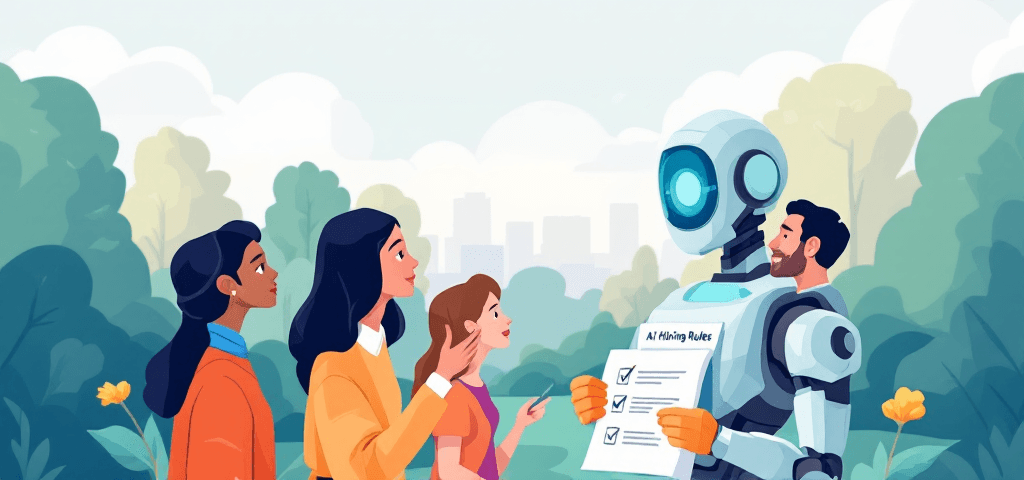

- Bias Mitigation: AI systems learn from historical data, which means they can inadvertently perpetuate existing biases if not carefully monitored. It is crucial to regularly audit algorithms and ensure they are not discriminating against certain groups.

- Transparency: Candidates should be informed when AI is being used in the hiring process. This promotes transparency and builds trust between employers and applicants.

- Human Oversight: While AI can assist in decision-making, it should not replace human judgment entirely. Recruiters should always have the final say in hiring decisions based on their expertise and understanding of organizational culture.

The question isn’t whether AI belongs in recruiting—it’s already there. The real question is whether it’s being used responsibly.

Building Trust Through Transparency and Ethics

Transparency in AI hiring starts with telling candidates the truth: an algorithm is screening them. Companies that hide AI involvement create distrust the moment it’s discovered. HR teams need the same clarity—if they don’t understand how the system scores candidates, they won’t defend its decisions.

Ethical AI recruitment demands written guidelines before deployment, not after a lawsuit. Define what the AI can evaluate, what it can’t, and who’s accountable when it fails. Policies without enforcement are theater.

Bias mitigation requires regular audits, not annual check-ins. Run statistical analyses on hiring outcomes by protected class. If your AI screens out qualified candidates from specific demographics at disproportionate rates, your model is broken. Most companies audit once during implementation, then assume the problem stays solved. Bias drifts as data changes. Audit quarterly or accept that you’re flying blind.
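As a rough illustration of what a recurring audit can compute, the sketch below compares pass rates across groups using the four-fifths rule, a screening heuristic from the EEOC's Uniform Guidelines on Employee Selection Procedures. The group labels, counts, and function names are made up for the example, and a flag here is a prompt for closer review, not a legal finding.

```python
from collections import Counter

def selection_rates(outcomes):
    """outcomes: iterable of (group, passed) pairs. Returns {group: pass rate}."""
    totals, passed = Counter(), Counter()
    for group, ok in outcomes:
        totals[group] += 1
        if ok:
            passed[group] += 1
    return {g: passed[g] / totals[g] for g in totals}

def adverse_impact_ratios(rates):
    """Divide each group's pass rate by the highest group's pass rate."""
    top = max(rates.values())
    if top == 0:
        # Nobody passed, so no disparity can be measured.
        return {g: 1.0 for g in rates}
    return {g: r / top for g, r in rates.items()}

def flag_groups(ratios, threshold=0.8):
    """Groups whose ratio falls below the four-fifths (0.8) threshold."""
    return sorted(g for g, r in ratios.items() if r < threshold)

# Hypothetical screening outcomes for one quarter (illustrative numbers only).
outcomes = (
    [("group_a", True)] * 48 + [("group_a", False)] * 52
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)

rates = selection_rates(outcomes)       # group_a: 0.48, group_b: 0.30
ratios = adverse_impact_ratios(rates)   # group_b: 0.30 / 0.48 = 0.625
flagged = flag_groups(ratios)           # ["group_b"]
```

Running the same calculation on each quarter's outcomes, and keeping the results, makes drift visible instead of assumed away. With small groups the ratio is noisy, so a real audit would pair it with a significance test.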

The gap between claiming ethical AI and proving it separates credible systems from compliance theater.

Balancing AI Insights with Human Judgment

AI analyzes data, identifies patterns, and evaluates responses against predefined criteria, but it can’t understand human emotions or context. It cannot perceive subtle cues like nervousness or brilliance, nor can it grasp the strategic reasoning behind unconventional answers. Humans provide context and understanding; AI offers objectivity and uniformity.

The predictable pitfalls:

- Relying solely on AI leads to a lack of understanding, an overemphasis on specific words or phrases, and penalties for candidates who do not communicate in a way that the algorithm understands.

- Relying solely on humans introduces biases, potential burnout after multiple interviews, and inconsistent decisions on similar candidates based on personal emotions or moods.

The hybrid recruitment strategy is effective because it addresses the weaknesses of both AI and humans. AI takes care of the systematic tasks such as checking qualifications, evaluating skills, and highlighting potential issues. On the other hand, humans make subjective decisions by assessing cultural compatibility, considering trade-offs, and deciding when to disregard the data.

How companies are using AI in recruiting to actually increase trust:

They delegate the repetitive tasks to AI while keeping humans as the ultimate decision-makers. SageScreen’s specially designed interviewers ensure that the initial screening process is consistent and every candidate is evaluated based on the same criteria. Subsequently, hiring managers review the structured results and make the final decision. The collaboration between humans and AI in this process does not aim to eliminate human judgment but rather to enhance it with more accurate and reliable information.

This human-AI collaboration is not just limited to recruitment; it’s a model that can be applied across various sectors where user research is involved. By leveraging AI’s strengths while retaining human oversight, organizations can achieve more efficient and trustworthy outcomes.

Increasing Candidate Confidence in AI Hiring

Candidates don’t trust what they can’t see. 67% of job seekers believe AI reduces racial and ethnic bias in hiring decisions compared to traditional methods. That number matters because it signals a shift: people recognize that human gatekeepers carry their own prejudices, often invisible and unchecked.

Structured screening builds candidate trust in AI by removing ambiguity. When every applicant faces the same structure, scored against the same criteria, the process becomes defensible. Candidates know they weren’t dismissed because someone “didn’t feel right” about their background or accent. They were evaluated on competencies that map directly to job requirements.
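A minimal sketch of what "the same structure, scored against the same criteria" can look like in practice. The criteria names, weights, and point scale are hypothetical and chosen only for illustration; they do not describe any particular vendor's rubric.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Criterion:
    name: str
    weight: float    # relative importance of this competency
    max_points: int  # top of the rating scale for this competency

# Hypothetical rubric: every candidate is scored against exactly these criteria.
RUBRIC = (
    Criterion("problem_solving", 0.5, 5),
    Criterion("communication", 0.3, 5),
    Criterion("domain_knowledge", 0.2, 5),
)

def score_candidate(ratings, rubric=RUBRIC):
    """Return a weighted score from 0 to 100.

    Raises ValueError if a criterion is missing or a rating is out of range,
    so no candidate can be scored on a partial or altered rubric.
    """
    total_weight = sum(c.weight for c in rubric)
    score = 0.0
    for c in rubric:
        if c.name not in ratings:
            raise ValueError(f"missing rating for {c.name}")
        points = ratings[c.name]
        if not 0 <= points <= c.max_points:
            raise ValueError(f"rating for {c.name} is out of range")
        score += c.weight * points / c.max_points
    return round(100 * score / total_weight, 1)

example = score_candidate(
    {"problem_solving": 4, "communication": 3, "domain_knowledge": 5}
)  # 78.0
```

Because the rubric is fixed data rather than an interviewer's memory, identical ratings always produce identical scores, and a missing criterion is an error instead of a silent omission.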

Reducing bias with AI requires validation at every step. Algorithms trained on diverse datasets and audited for disparate impact create fairer outcomes than resume skimming or unstructured phone screens. This isn’t theoretical. Companies using validated AI screening report higher candidate satisfaction scores because applicants perceive the process as merit-based rather than arbitrary.

The candidate experience improves when screening feels consistent and transparent. No ghosting. No black holes. Just clear expectations and measurable performance.

Addressing Concerns Around AI in Hiring

Job displacement fears dominate the conversation, but they miss the point. AI doesn’t replace recruiters—it eliminates the grunt work that keeps them from doing actual recruiting. The panic around automation stems from misinformation about

The real concern isn’t that AI will take jobs. It’s that companies will deploy it badly—using black-box algorithms without oversight, or worse, treating AI recommendations as gospel instead of input. When candidates worry about AI, they’re worried about opacity and lack of recourse. They want to know: Who’s accountable when the system gets it wrong?

The answer separates responsible adoption from reckless deployment. Systems built with clear scoring criteria, human review loops, and audit trails don’t threaten fairness—they enforce it. The fear isn’t irrational. The response is.

Productivity Gains from Responsible AI Adoption

Automating manual tasks boosts HR productivity by 63%. That’s not a small improvement—it’s a complete transformation. Resume screening, interview scheduling, initial candidate evaluation: these aren’t strategic tasks. They’re necessary obstacles that AI removes.

The productivity boost with AI comes from getting back time that was previously wasted on administrative tasks. Recruiters spend less time going through applications and more time on things that machines can’t do: building relationships, assessing cultural fit, closing candidates.

Benefits of Automation in HR

Here are some ways automation benefits HR:

- Creating consistency at scale: One recruiter can evaluate 200 candidates with the same rigor they’d apply to 20. The quality doesn’t degrade. The criteria don’t drift.

- Handling volume without collapsing processes: The process doesn’t break down under high numbers.

This is where using AI in recruiting actually starts to increase trust: when teams have enough time to be thorough, candidates have better experiences. When decisions happen faster without cutting corners, hiring managers trust the process. Speed and quality stop being trade-offs.

Best Practices for Building Trust in AI Hiring Solutions Like SageScreen

Trust doesn’t come from marketing copy. It comes from what you build into the system before anyone ever uses it.

1. Start with diverse training data

If your AI learns from homogeneous datasets, it will replicate the same narrow patterns that created bias in the first place. SageScreen trains on candidate pools that reflect actual workforce diversity across industries, geographies, and backgrounds. This isn’t about checking boxes. It’s about teaching the model what good looks like across the full spectrum of human capability, not just the subset that’s historically had access.

2. Embed legal compliance from day one

Ethical oversight isn’t a quarterly review. It’s baked into the architecture. SageScreen’s system operates under continuous monitoring against anti-discrimination laws, EEOC guidelines, and evolving regulatory standards. When compliance becomes structural rather than procedural, you stop playing defense and start building systems candidates can actually trust.

3. Communicate what the AI does and what it doesn’t

Candidates distrust black boxes. SageScreen’s AI hiring solutions earn trust because they’re explicit about scope: the AI conducts structured interviews, scores responses against validated criteria, and surfaces patterns humans might miss. It doesn’t make final hiring decisions. It doesn’t replace judgment. It removes noise so your team can focus on signal.

The companies that earn trust don’t hide behind complexity. They expose their methodology, acknowledge limitations, and let the work speak. SageScreen’s approach is simple: structured inputs, validated scoring, transparent outputs. No magic. No mystery. Just disciplined evaluation at scale.

Call to Action: Explore Tailored SageScreen Solutions for Trusted Hiring Outcomes

Companies are using AI in recruiting to actually increase trust by replacing inconsistent screening methods with validated, structured processes. SageScreen’s purpose-built interviewers deliver exactly that: clarity at scale without sacrificing accuracy or control.

You get:

- Structured evaluations that eliminate gut-feel hiring

- Scored assessments that create defensible decisions

- Validated outcomes that stand up to scrutiny

A trusted AI interviewing platform doesn’t ask you to choose between speed and rigor. SageScreen’s tailored hiring solutions give you both: disciplined screening that cuts through noise while maintaining the transparency candidates and compliance teams demand.